To fully understand search engine optimization (SEO), you need to know how Google’s search process works. In this article, we get to the heart of SEO by learning “How Search Works” and taking apart Google’s search systems. Website owners, marketers, and SEO experts who want to boost their sites’ exposure have to understand how Google’s web crawlers find and store content. By explaining the details of Google’s crawling process, we give readers the tools they need to optimize their websites, make them easier to find, and strengthen their online presence. We begin by breaking down the basic ideas behind Google’s crawling process.

Google Engineer Details Crawling Process

Find out how Google does its crawling right from the source. A Google engineer goes into great detail about how Googlebot crawls the web, stores content, and finds new pages. Understanding these technical details is important for making sure that search engines can crawl and index your website.

Role of Google Engineers

Engineers at Google are very important when it comes to building and managing the systems that Google uses for crawling. Because they know a lot about algorithms and system design, they are always making Googlebot better at crawling and indexing web content quickly and correctly.

Technical Aspects Unveiled

Learn how Google engineers describe the technical details of their crawling process: how Googlebot crawls websites, decides which pages to index first, and deals with problems it comes across while crawling. To improve website structure and search engine visibility, you need to understand these subtleties.

Enhancing Crawl Efficiency

Listen to Google experts talk about ways to make crawling faster and make your web content easier to find. Look into ways to improve website design, strengthen internal linking structures, and waste as little crawl budget as possible. By following these best practices, you can make your website much easier for search engines to crawl and find.

Future Directions and Innovations

Get a look at how Google engineers think the search process will change in the future. Explore ongoing research aimed at improving crawling speed and handling dynamic content. Stay informed to anticipate upcoming changes and adapt to evolving web technologies. Website owners and SEO experts can maintain a competitive edge by understanding future directions in search engine algorithms.

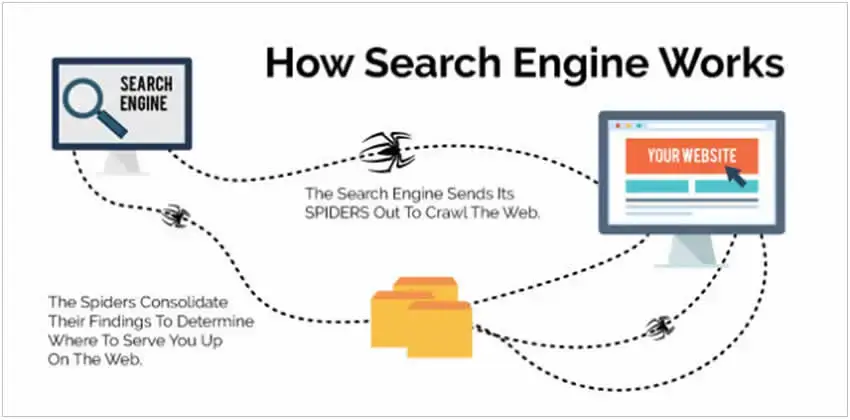

How Googlebot Crawls the Web

Find out all the complicated ways that Googlebot gets around the huge internet. Learn about Google’s search process, such as how Googlebot finds web pages and decides which ones to index first. Understanding these processes is important for making sure that your website’s structure is optimized and that it shows up high on search engine results pages.

Overview of Googlebot

Googlebot systematically navigates the internet, indexing web pages for search. Understanding its operations is vital for website owners and SEO professionals aiming to boost rankings. Familiarity with Googlebot’s functions aids in optimizing online visibility and presence.

Crawling Frequency and Depth

Googlebot decides how often and deeply to crawl a website based on things like how authoritative it is, how fresh the content is, and how quickly the server responds. Crawls happen more often and more deeply on websites with good content and strong backlinks. This makes indexing go faster and improves search engine exposure.

Prioritization of Pages

Googlebot uses complex algorithms to decide which pages to crawl and index first based on things like how relevant they are, how authoritative they are, and how engaged their users are. By learning how Googlebot decides which web pages are important, website owners can adjust their content and internal linking structure so that key pages are more visible in search results.

Handling Dynamic Content

Googlebot is very good at working with dynamic content, such as pages that are rendered with JavaScript and apps that are driven by AJAX. It can run JavaScript and render page elements so that it can crawl and index dynamic material correctly. To ensure complete indexing and search engine exposure, you need to know how Googlebot moves through and understands dynamic web pages.

Improving Discovery & Google’s Crawling

Strategic improvements can help search engines discover and crawl your website more effectively. By following best practices, you can make sure that search engine crawlers can find and process your content correctly. This will make your site more visible in search results and bring you more organic traffic.

Avoid Crawl Budget Exhaustion

Avoid wasting crawl budget by giving important pages more attention, reducing duplicate content, and improving server response times. You can make sure that search engine crawlers focus on indexing your most valuable content by managing your crawl budget well.
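One common source of duplicate content is the same page being reachable under several URL variants. The sketch below shows one way to spot such variants by normalizing URLs before comparing them. The list of ignorable parameters and the normalization rules are assumptions chosen for illustration, not a description of how Google deduplicates URLs.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change what the page shows.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Return a simplified form of the URL for duplicate detection."""
    parts = urlsplit(url)
    # Drop tracking parameters and sort the rest so order does not matter.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    # Treat "/shoes/" and "/shoes" as the same path, but keep the root "/".
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def group_duplicates(urls):
    """Map each normalized URL to the variants that collapse onto it."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    # Keep only groups where more than one variant was found.
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://Example.com/shoes/?utm_source=news&color=red",
    "https://example.com/shoes?color=red",
    "https://example.com/hats",
]
duplicates = group_duplicates(crawled)
```

Groups reported by `group_duplicates` are candidates for a canonical tag or a redirect, so that crawlers spend their visits on one version of each page.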

Implement Good Internal Linking

Make your website’s internal linking structure stronger so that both users and search engine crawlers can find their way around it easily. Use relevant anchor text to connect important pages, building a logical hierarchy that helps spread link equity and makes pages easier for crawlers to find.
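A simple way to check an internal linking structure is to measure how many clicks each page is from the homepage and to look for pages that no internal link reaches. The following is a minimal sketch over a hypothetical in-memory link map (the `site` dictionary is invented for illustration); a real audit would build this map from a crawl of your own site.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the internal pages it links to.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/widget"],
    "/blog/post-1": ["/products/widget"],
    "/old-page": ["/"],
}

depths = click_depths(site, "/")
# Pages that exist but cannot be reached by following links from the homepage.
orphans = sorted(set(site) - set(depths))
```

Here `/old-page` links out but nothing links to it, so it ends up in `orphans`. Pages with a large depth or no inbound internal links are the ones that usually benefit most from extra links.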

Make Sure Pages Load Quickly

Reduce server response times, compress images, and remove scripts that aren’t needed to make your website load faster. Not only do pages that load faster make the user experience better, but they also let search engine crawlers fetch more pages within the crawl budget they are given.

Eliminate Soft 404 Errors

Identify and rectify soft 404 errors to prevent search engine crawlers from indexing irrelevant pages. Ensure error pages and redirects return appropriate HTTP status codes for proper crawling.
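A soft 404 is a page that tells visitors the content is missing while the server still answers with a 200 status code. The sketch below flags likely candidates from a status code and a response body. The phrase list and the word-count threshold are assumptions made for illustration, not Google's detection logic.

```python
import re

# Hypothetical phrases that often appear on "not found" pages.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing was found")


def looks_like_soft_404(status_code: int, body: str, min_words: int = 20) -> bool:
    """Heuristically flag pages that say 'not found' but return 200."""
    if status_code != 200:
        # A real 404 or 410 is already reported correctly.
        return False
    # Strip tags crudely so that only the visible text is examined.
    text = re.sub(r"<[^>]+>", " ", body).lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Nearly empty pages served with 200 are also suspicious.
    return len(text.split()) < min_words
```

For example, `looks_like_soft_404(200, "<h1>Page not found</h1>")` returns `True`, while the same body served with a 404 status returns `False`. Pages that are flagged should be reviewed and, if the content is really gone, changed to return a 404 or 410 status.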

Consider Robots.txt Tweaks

Make small changes to your robots.txt file to limit search engine crawlers’ access to certain parts of your website. Use robots.txt rules strategically to keep crawlers away from low-value sections so that more of your crawl budget goes to important pages. Keep in mind that robots.txt only controls crawling, so it should not be relied on to protect sensitive content. Used this way, it helps you get the most out of your crawl budget and makes the site easier to crawl overall.
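Before publishing a robots.txt change, it helps to test which paths the new rules would block. The sketch below does this with Python's standard `urllib.robotparser` module. The rules, the `www.example.com` host, and the `is_crawlable` helper are hypothetical. Note that this parser applies the first rule that matches, while Google documents that it uses the most specific matching rule, so the result is only an approximation for files that mix overlapping Allow and Disallow lines.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps crawlers out of low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() accepts the file content as a list of lines, so no network is needed.
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path: str, agent: str = "Googlebot") -> bool:
    """Check whether the rules above let the given agent fetch the path."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


for path in ("/blog/post", "/cart/checkout", "/search?q=shoes"):
    print(path, is_crawlable(path))
```

Running a list of your most important URLs through a check like this is a quick way to catch a rule that blocks more than intended.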

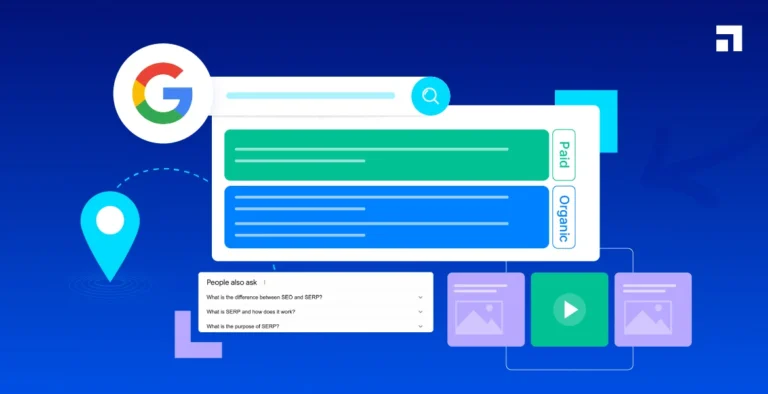

Google Guideline About “How Search Works”

In its recommendations, Google goes into great detail about the basic rules of how search engines work. Website owners and SEO professionals who want to improve their search engine rankings and optimize their online presence need to understand these rules. By following Google’s rules, you can make sure that your website is crawled, indexed, and shown in search results correctly.

Core Principles of Search

Google’s guidelines elucidate key concepts of search engine functionality, emphasizing relevance and freshness. Aligning strategies with these principles enhances visibility in search results. Prioritizing high-quality content and user experience aligns with Google’s guidelines, bolstering website visibility.

Importance of Quality Content

Google stresses how important it is to create original, high-quality content that users will find useful. Website owners can attract organic traffic and move up in the search engine results by focusing on content that is useful, interesting, and meets users’ needs. For long-term success in SEO, you must have high-quality content.

User Experience and Accessibility

Google prioritizes user-friendly websites that are accessible to all users, including those with disabilities. To boost user satisfaction and search rankings, website owners should ensure easy navigation, fast loading times, and mobile compatibility. Accessibility and user experience are important parts of effective SEO tactics.

Transparency and Ethical Practices

Google wants website optimization to be honest and follow ethical standards, and it discourages tricks and deceptive methods. Google’s guidelines say that website owners should not use practices like keyword stuffing, cloaking, and link schemes. Being honest and doing the right thing earns the trust of both users and search engines, which leads to long-lasting SEO results.

What is the Crawling Process as Described by Google?

Google’s description of crawling clarifies how web crawlers navigate the internet and index pages. Understanding this is crucial for website owners and SEO experts aiming to enhance online presence. It provides insights into optimizing content for better search engine visibility.

Systematic Web Crawling

Crawlers from Google regularly browse the web, following links from one page to the next to find and index information.
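The link-following idea can be illustrated with a small breadth-first crawler. This is a simplified sketch, not Googlebot's actual algorithm: it reads pages from a hypothetical in-memory dictionary (`PAGES`) instead of the network, and it ignores scheduling, robots.txt, and rendering.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical pages standing in for real HTTP responses.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


def crawl(seed):
    """Visit pages breadth-first, following links and skipping repeats."""
    seen = {seed}
    order = []
    frontier = deque([seed])
    while frontier:
        url = frontier.popleft()
        html = PAGES.get(url)
        if html is None:
            continue  # unknown URL: nothing to fetch in this toy example
        order.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return order


visited = crawl("https://example.com/")
```

Every page in this example is found because some already-known page links to it, which is why pages without inbound links are hard for a crawler to discover.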

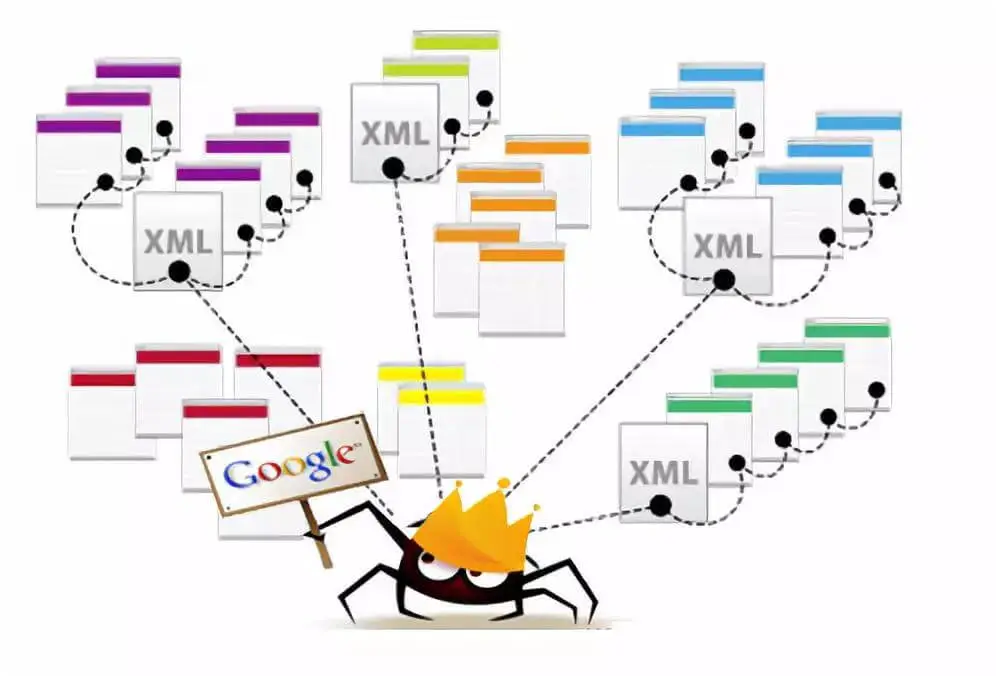

Indexing Relevant Content

Google gives more weight to indexing material that is relevant and trustworthy based on metrics like quality, user engagement, and relevance.

Discovering New Pages

Googlebot is always searching the internet for new pages. Links from pages it already knows and sitemaps submitted by website owners help it find them.

Updating the Index Regularly

Google’s crawlers revisit pages often and update the index so that it always includes the newest and most relevant web content.

How Can Marketers Ensure Their Content is Effectively Discovered and Crawled by Googlebot?

Marketers can make it easier for search engines to find and crawl their content by using smart SEO techniques. Ensure correct website structure, submit sitemaps, create quality content, optimize meta tags, and build trustworthy backlinks. Following Google’s guidelines enhances content visibility and boosts Googlebot’s discovery and crawling. Adhering to these practices maximizes the chances of content indexing and improves search engine rankings.

Optimize Website Structure

Marketers should organize their website’s content logically and keep it easy to reach so that Googlebot can find and crawl it without trouble.

Submit Sitemaps to Google

To make it easier for Googlebot to find and index all of your website’s important pages, marketers should submit XML sitemaps in Google Search Console.
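An XML sitemap is a plain list of URLs in the format defined by the sitemaps.org protocol. As a minimal sketch, the function below builds one with Python's standard library. The `build_sitemap` helper and the example URLs and dates are hypothetical, and only the `loc` and `lastmod` fields are included.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified_or_None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # Dates use the W3C format, for example 2024-01-15.
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap(
    [
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/about", None),
    ]
)
```

The resulting string can be saved as `sitemap.xml` at the site root and then submitted in Search Console.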

Create High-Quality Content

Marketers should focus on making original, useful, and interesting material that users will want to read and that Google’s crawlers will notice.

Build Authoritative Backlinks

Building high-quality backlinks from trustworthy websites in their niche is something that marketers should do on a regular basis. These signals help Googlebot figure out how important and relevant their content is.

Conclusion

In conclusion, to fully understand how search engine optimization (SEO) works, it’s necessary to understand how Google crawls websites. Website owners, marketers, and SEO professionals can use what they have learned in this deep dive to improve their online presence. By breaking down Google’s search systems, we give readers the tools they need to become more visible online.